Research is repeatable if we can re-run the researchers' experiment using the same method in the same environment and obtain the same results. Repeatability presupposes that the research artifacts backing the published results are shared. Such sharing is essential if colleagues and reviewers are to evaluate our results on the basis of accurate and complete evidence.

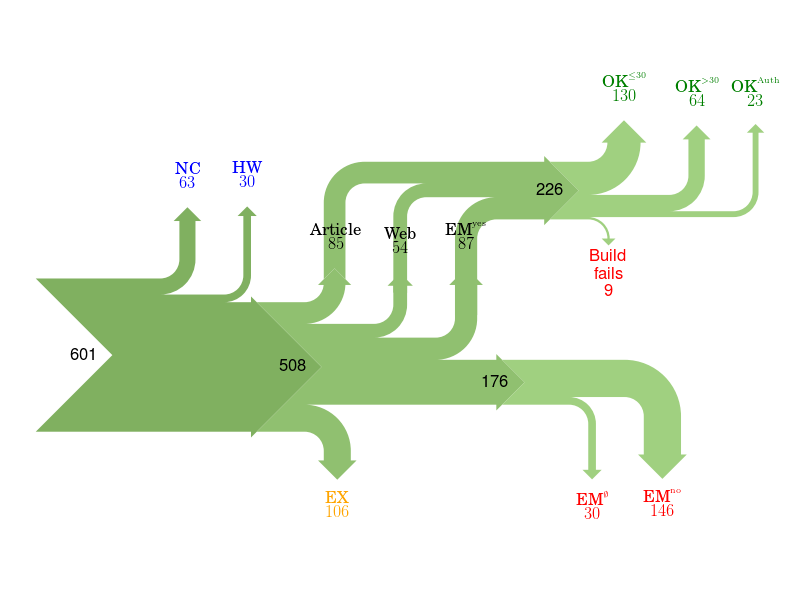

In this study, we examine the extent to which Computer Systems researchers share their research artifacts (source code), and the extent to which the shared code builds. We refer to this combination, code that is shared and that builds, as weak repeatability. We examined 601 papers from ACM conferences and journals, attempted to locate any source code that backed up the published results, and, if found, tried to build the code.
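
The two quantities examined here, how often code is shared and how often shared code builds, can be illustrated with a small tally. The following is only a sketch under stated assumptions: the `PaperRecord` type, its field names, and the `weak_repeatability_rates` function are hypothetical and are not the tooling used in the study, and the sample records are invented for illustration rather than drawn from the 601 papers.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class PaperRecord:
    """Hypothetical per-paper record; field names are illustrative only."""
    paper_id: str
    code_found: bool               # was any backing source code located?
    builds: Optional[bool] = None  # None when there was no code to build


def weak_repeatability_rates(records: Iterable[PaperRecord]) -> dict:
    """Tally how many papers share code and how many of those build."""
    records = list(records)
    total = len(records)
    shared = [r for r in records if r.code_found]
    built = [r for r in shared if r.builds]
    return {
        "total": total,
        "shared": len(shared),
        "built": len(built),
        # Fraction of all papers whose code could be located.
        "share_rate": len(shared) / total if total else 0.0,
        # Fraction of located code that built successfully.
        "build_rate_of_shared": len(built) / len(shared) if shared else 0.0,
    }


if __name__ == "__main__":
    # Invented toy records, not data from the study.
    sample = [
        PaperRecord("paper-a", code_found=True, builds=True),
        PaperRecord("paper-b", code_found=True, builds=False),
        PaperRecord("paper-c", code_found=False),
    ]
    rates = weak_repeatability_rates(sample)
    print(rates["total"], rates["shared"], rates["built"])
```

Keeping the build rate conditional on code having been found separates the two questions the study asks: a paper with no located code counts against sharing, not against building.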